Table of Contents

- Introduction

- Lisa Su’s Perspective on the AI Industry’s Trajectory

- AMD’s Strategic Initiatives in AI Development

- Industry Leaders’ Views on the AI Market’s Stability

- The Role of High-Performance Computing in AI Expansion

- Addressing Export Challenges and Global Market Dynamics

- Future Outlook: AMD’s Vision for AI Integration

- Conclusions

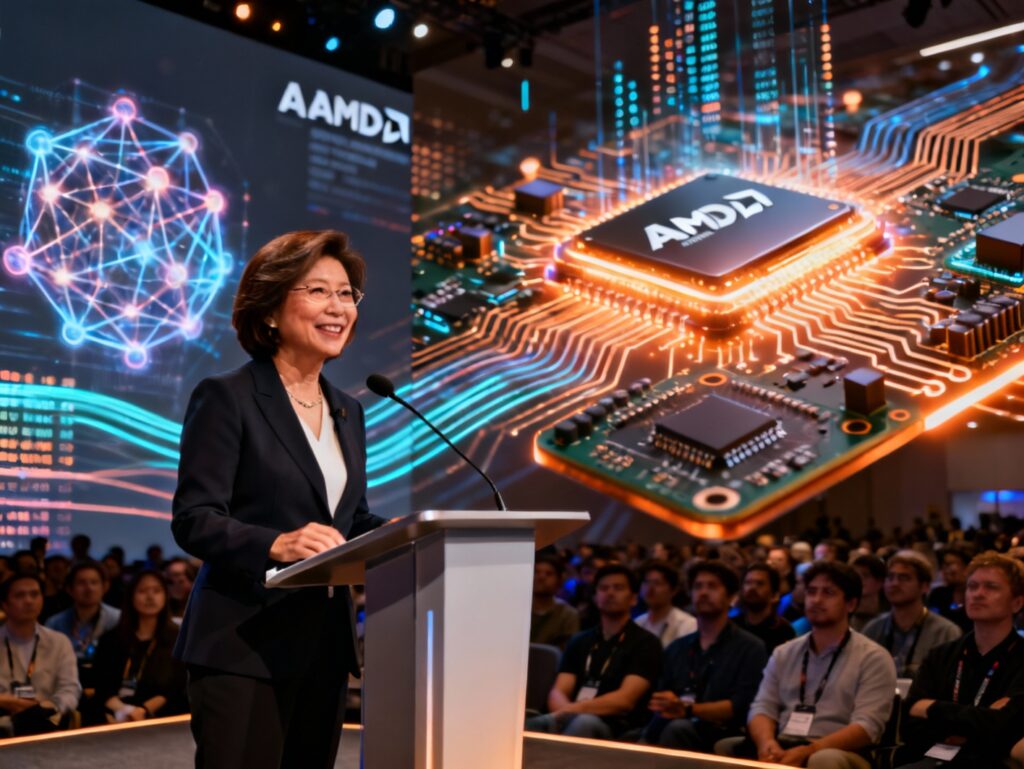

At a recent industry conference, AMD CEO Lisa Su firmly addressed speculation about an artificial intelligence (AI) industry bubble. She asserted that such concerns are overblown, emphasizing that AI is still in its nascent phase. Su described the sector as being in the very early days of realizing its true potential, with far-reaching growth on the horizon. Her comments signal AMD’s confidence in AI’s trajectory and its strategic intent to be a key architect in shaping that future. Su’s perspective reflects years of investment and planning, underlining not only AMD’s role in technology innovation but also its belief that AI will continue to evolve across every layer of computing, from edge devices to the data center.

Lisa Su’s Perspective on the AI Industry’s Trajectory

Lisa Su highlighted that the current state of the AI industry mirrors the early chapters of a long journey. According to her, we are at the start of a technological wave that has the potential to transform industries and redefine computing. She noted that although excitement around AI is growing, much of the core infrastructure and software development needed to fully capitalize on AI is still being built. Su stressed that AMD is strategically positioned to become a pivotal player in this transformation.

The future potential of AI, she explained, isn’t limited to enterprises or developers. Rather, she expects AI to permeate sectors including healthcare, education, finance, and entertainment. While headlines may focus on generative AI and chatbots, she emphasized that the real value lies in the technology’s ability to augment human productivity and decision-making at scale.

Su drew attention to the immense opportunities across the compute stack—from cloud to edge deployments—and positioned AMD as both an enabler and innovator. Her conviction echoes AMD’s long-term R&D strategy and aligns with the broader industry’s shift toward heterogeneous computing, where AI accelerators, CPUs, and GPUs work together to power increasingly complex workloads.

AMD’s Strategic Initiatives in AI Development

AMD has made several targeted strategic moves to solidify its position in the competitive AI market. Among the most notable is its collaboration with OpenAI, placing AMD chips in cloud environments used to train next-generation models. This partnership reflects both AMD’s growing influence in AI compute and OpenAI’s need for scalable, high-performance hardware beyond a single supplier.

Further strengthening its portfolio, AMD acquired several AI-focused companies. Nod.ai brings an array of optimization tools designed to simplify AI model deployment across hardware platforms. By integrating Nod.ai’s compiler technology, AMD can offer developers a faster, more streamlined workflow to unlock the power of their chips. Similarly, the acquisition of Silo AI, the largest private AI lab in the Nordics, adds valuable engineering talent and proprietary tools to AMD’s expanding software ecosystem.

These moves aren’t arbitrary. They align with AMD’s overarching AI roadmap: build a complete end-to-end platform that includes hardware, software, and services. As AI models grow larger and more complex, AMD aims to provide not just raw processing power but also the tools and frameworks needed to support the full AI lifecycle, from data ingestion to inference. With these calculated investments, AMD is positioning itself as an increasingly credible competitor to incumbents like Nvidia and Intel.

Industry Leaders’ Views on the AI Market’s Stability

Not every voice in the semiconductor and AI industries shares Lisa Su’s confidence about the stability of the AI boom. Among industry heavyweights, however, sentiment converges strikingly. SK Group Chairman Chey Tae-won, for instance, emphasized the enduring value AI brings to the energy, telecommunications, and manufacturing sectors. He highlighted AI’s essential role in enhancing productivity and automating processes, claiming that much of its potential has yet to be unlocked. Rather than warning of a bubble, Chey portrayed AI as a long-term structural shift.

Similarly, Nvidia’s CFO Colette Kress has echoed Lisa Su’s optimism. Kress underscored the soaring demand for AI infrastructure and increased enterprise appetite for adopting machine learning solutions. She argued that although revenue spikes could create short-term distortions, the underlying demand is real and backed by fundamental use cases spanning countless verticals. Nvidia, like AMD, considers the AI wave to be sustainable and transformative.

Taken together, these viewpoints suggest that leading semiconductor players view AI not as a hype-fueled trend but as a robust domain with structural longevity. Lisa Su’s assertion that AI is in its early innings is reinforced by peers who expect the space to keep maturing. While market volatility may occur, the consensus reflects a deep-rooted belief in AI’s foundational role in modern computing infrastructure.

The Role of High-Performance Computing in AI Expansion

The explosive growth of AI is underpinned by advances in high-performance computing (HPC), a domain where AMD has long invested. To support the rapidly escalating computational needs of AI workloads, chipmakers must deliver processors that can handle massive parallelism, rapid data throughput, and low-latency operations. AMD’s answer to this challenge lies in its integrated portfolio of CPUs, GPUs, and accelerators designed to work in tandem.

With the rise of transformer models and deep learning networks, the demand for robust training and inference platforms has soared. AMD’s Instinct MI300 series, for example, includes the MI300A, which combines CPU cores and GPU compute in a single package, alongside the GPU-only MI300X accelerator, making the family a formidable contender in environments that require large-scale AI training. These chips are designed for memory capacity and bandwidth, compute density, and power efficiency, all essential attributes for AI models that now run to billions of parameters.

AMD’s approach hinges not only on hardware but on software optimization as well. Its ROCm software stack lets developers program and tune AMD GPUs, offering an alternative to Nvidia’s CUDA ecosystem. By investing heavily in the HPC infrastructure needed to run AI at scale, AMD is laying a foundation that supports innovation across data centers, supercomputers, and enterprise platforms. Through these initiatives, AMD demonstrates that long-term AI leadership depends as much on the software that exposes compute performance as on versatile hardware architecture.

Addressing Export Challenges and Global Market Dynamics

Global dynamics surrounding trade and technology are creating new challenges for semiconductor companies, particularly when it comes to AI chip exports. For AMD, navigating export restrictions, especially those imposed by the U.S. government on shipments to China, requires strategic foresight. The 15% levy on sales of certain AI chips bound for China has prompted AMD to rethink its product offerings and market entry strategies.

Rather than pulling back, AMD is adapting. CEO Lisa Su noted the importance of compliance while emphasizing the company’s goal of maintaining global competitiveness. AMD is reportedly developing alternative chip models that satisfy regulatory limits while still delivering value to international customers. These modified versions keep compute performance below the regulatory thresholds while retaining capabilities tailored to local AI applications.

At the same time, AMD is increasing its focus on diversification, tapping into growth in regions such as Southeast Asia, India, and Europe. This geographic rebalancing helps mitigate risk from overreliance on any single market and reinforces AMD’s commitment to resilient international expansion. As geopolitical dynamics continue to affect tech policy, AMD’s model illustrates how agile adaptation—and a robust product roadmap—can secure leadership even amid shifting regulatory environments.

Future Outlook: AMD’s Vision for AI Integration

Looking ahead, AMD envisions a future where AI is seamlessly integrated into every tier of its product line. Lisa Su refers to this concept as “pervasive AI”, a strategy aiming to embed AI capabilities across desktops, servers, laptops, and embedded devices. Rather than confining AI to cloud-based processing alone, AMD is working toward distributing intelligent features more broadly across edge and client environments.

This vision emphasizes application diversity. For instance, AI-enhanced laptops equipped with AMD CPUs and GPUs will support real-time translation, audio optimization, and advanced security features—all processed locally without a dependence on the cloud. In data centers, AMD’s chips will enable organizations to scale personalized services and automate analytics with enhanced efficiency.

Moreover, AMD’s investments in software frameworks aim to ensure interoperability and smoother deployment of AI functionality. Through initiatives like support for open AI models and compatibility with major machine learning libraries, AMD is lowering the barrier to entry for developers and enterprises alike. By embedding AI as a standard feature, not an add-on, Lisa Su affirms AMD’s goal of transforming how users interact with devices and data. This transformation, spanning smart edge computing and intelligent workloads in the cloud, defines AMD’s blueprint for making AI ubiquitous.

Conclusions

Lisa Su’s firm stand against the idea of an AI industry bubble reflects AMD’s strategic depth and long-term vision. Positioned at the intersection of AI innovation and advanced computing, AMD is not merely reacting to trends but shaping the foundations of the AI revolution. Through targeted acquisitions, calculated partnerships, and continuous product innovation, the company is building a diversified ecosystem poised to benefit from sustained growth in AI adoption. As AI becomes increasingly embedded in everyday technologies, AMD’s focus on high-performance infrastructure and software integration is intended to keep it a central player. By continuing to adapt to global market challenges and regulatory constraints, AMD underscores its belief that AI is not a passing phase but a transformative force with years of growth ahead.