Table of Contents

- Introduction

- The Genesis of the OpenAI-Jony Ive Collaboration

- Design Philosophy: Embracing Simplicity and Intuition

- Technical Innovations: A Screenless, Context-Aware Experience

- Overcoming Challenges: Privacy, Computing Power, and Design Hurdles

- Market Positioning: A ‘Third Core Device’ in the Tech Ecosystem

- Future Prospects: Anticipated Launch and Industry Implications

- Conclusions

Introduction

OpenAI has partnered with legendary designer Jony Ive to create a new AI device that promises to redefine the way humans interact with artificial intelligence. Rather than building another smartphone or wearable, they envision a screenless, intuitive, and context-aware assistant that blends seamlessly into daily life. The prototype is meant to be more than a gadget: it is an attempt to rethink how technology should serve us. By combining OpenAI's leadership in artificial intelligence with Ive's mastery of design, the partners aim to deliver a device that removes distractions while increasing utility, creating a new kind of human-centric computing experience.

The Genesis of the OpenAI-Jony Ive Collaboration

The roots of the collaboration between OpenAI and Jony Ive lie in a shared belief: the current trajectory of consumer technology no longer prioritizes user well-being. Ive, widely known for designing many of Apple's most iconic products, had been seeking to explore a new kind of device, one less focused on screens and more on presence. Around the same time, OpenAI recognized that AI would soon be able to understand users' real-world contexts, yet it still lacked a dedicated physical form.

When Sam Altman, CEO of OpenAI, and Jony Ive began their discussions, they quickly found common ground. Both envisioned a non-intrusive, ambient computing device that would complement daily life rather than complicate it. Their early conversations focused not on specifications but on philosophy, treating computing as a utility rather than a centerpiece. The core concept was to embed AI in an object that fades into the background yet responds when needed. From these early discussions, the project took shape around a single goal: a device that understands human intent with minimal input and offers quiet companionship throughout the day. This vision set the direction, tone, and user experience goals of the prototype.

Design Philosophy: Embracing Simplicity and Intuition

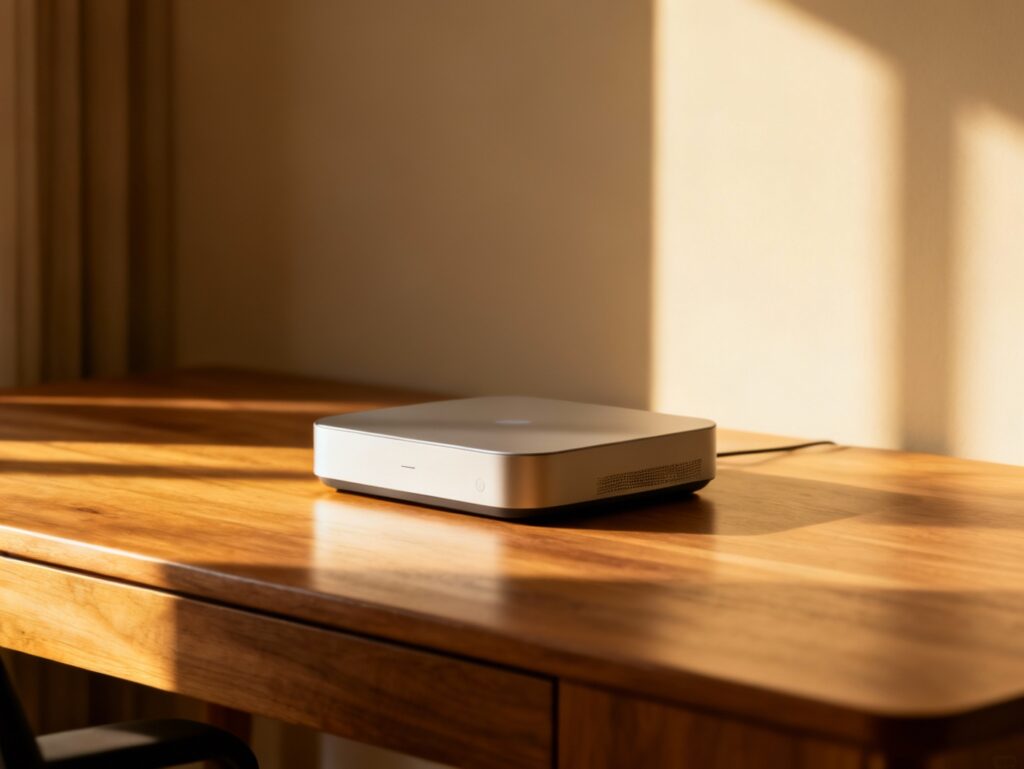

Jony Ive’s influence on product design is marked by simplicity, elegance, and human focus—principles that deeply shape this AI device. Unlike conventional tech that often clamors for attention through screens, notifications, and constant interaction, Ive’s design emphasizes discretion and seamlessness. The goal is not to add another screen to your life, but to reduce the digital noise by replacing it with intelligence that is gently present.

Ive wants to create a device that people forget they are even using, because it simply works in the background. The design is clean, free of unnecessary embellishment, and built around tactile forms that feel familiar yet futuristic. Every element has a function, and the design avoids clutter, both physical and mental. This approach runs counter to current trends in technology, where maximizing screen time and piling on features often drive product development.

Instead of demanding attention, the device aims to adapt to people's routines and preferences. The interplay of minimalism and function is central to its industrial design, fostering what Ive describes as a non-invasive companion. In practice, this means interactions happen naturally, through voice, movement, or subtle cues, rather than requiring taps or visual attention. Ive's philosophy offers a refreshing alternative to the overstimulating design language of today's devices.

Technical Innovations: A Screenless, Context-Aware Experience

At the heart of the device lies a technical framework designed for ambient intelligence. Its most distinctive feature is the absence of a screen, which reflects the goal of reducing visual distraction. Instead of presenting information visually, it combines microphones, cameras, and AI models for contextual understanding, allowing it to interpret a user's surroundings, tone of voice, and even habits.

The microphones listen passively for relevant triggers, while on-board cameras interpret environmental cues such as objects, spatial layouts, and movement. These inputs are processed on-device where possible, which protects privacy and improves responsiveness, with the cloud handling heavier workloads. The AI engine combines this information into situational awareness, offering assistance based on what users are doing rather than only on what they tell it.

Using advances in natural language processing and multimodal AI, the device can judge when to speak, how much to say, and how to tailor its tone to the user's mood. For example, if someone appears stressed during a conversation, the AI might defer non-urgent alerts. This kind of adaptive behavior helps bridge the gap between reactive AI, which waits for commands, and proactive AI, which anticipates needs.

This tight integration of hardware and software aims for an experience that feels intuitive, intelligent, and attuned to individual preferences, without putting yet another glowing screen in users' hands.

Overcoming Challenges: Privacy, Computing Power, and Design Hurdles

Building the device has not been without difficulties. One of the primary challenges was protecting user privacy, given its reliance on microphones and cameras. To address these concerns, the team developed a layered security model: much of the data processing happens on-device, where sensitive information can be encrypted and responses delivered in real time, while far less data is transmitted to external servers.

Another hurdle was fitting enough computing power into a minimalist form factor. Unlike smartphones or wearables, which have screen interfaces to guide usage, this device had to rely entirely on intelligence and sensors. That meant pairing powerful, energy-efficient silicon with efficient algorithms that can operate within tight resource limits, both in passive standby and during active use.

Aesthetically and functionally, the challenge of embedding so much technology into an object with no visible interaction points was considerable. The team experimented with various materials, internal layouts, and passive heat dissipation designs to support long-term reliability and user comfort. Ive’s obsession with craftsmanship pushed the boundaries of compact hardware engineering while maintaining the elegance of the product’s form.

The resulting prototype reflects close collaboration between engineering and design, with technology and form developed to coexist rather than compete. Its development demanded iterative problem-solving, interdisciplinary innovation, and persistent reevaluation of what a personal AI assistant should look and feel like.

Market Positioning: A ‘Third Core Device’ in the Tech Ecosystem

OpenAI and Jony Ive don’t view this AI device as a replacement for smartphones or computers, but rather as a ‘third core device’—a new category that fills the experiential gaps between mobile and desktop computing. Its purpose is to carry ambient intelligence alongside users throughout the day, gently enhancing awareness, productivity, and well-being without inviting an avalanche of alerts, apps, or distractions.

With this positioning, the device bridges the passive assistance found in today's wearables and the deep computing capability of laptops. It focuses on micro-interactions and intelligent suggestions, freeing users from constant screen engagement. For example, it might remind users of a meeting when it senses they are getting ready to leave, deliver softly spoken updates, or offer conversational insights in quieter moments.

In a competitive tech market, such a product could occupy an entirely new niche, appealing both to users overwhelmed by traditional devices and to companies seeking a more natural way to bring AI into employees' work. Businesses might adopt it for subtle workplace assistance, while individuals could embrace it to improve focus and wellness. This role sets it apart and positions it as a strategic complement to the devices we already carry, offering relief from what has come to be called digital fatigue.

Future Prospects: Anticipated Launch and Industry Implications

The device is expected to launch within the next two years, introducing what its creators hope will be a new interface paradigm. As development moves toward production, OpenAI and its partners are focused on refining the user experience and tightening the integration between hardware and AI. Internal testing and limited trials are expected ahead of a broader rollout, potentially starting with developers and early adopters.

The implications of this launch extend beyond the product itself. It signals a shift toward context-sensitive, situational computing in which interfaces dissolve into the background. By prioritizing human-centered design and minimizing friction, this device may influence how future technologies fit into our lives: invisibly but meaningfully.

Developers, designers, and AI researchers are already taking note of the philosophical and technical shifts this project embraces. An ecosystem around such a device could spawn a new generation of software focused on ambient utility rather than screen-based interaction. If successful, the launch could serve as the catalyst for a new computing era, one built not on more features but on a smarter presence.

Conclusions

The collaboration between OpenAI and Jony Ive represents more than the creation of a new device—it marks the emergence of a new relationship between humans and machines. By focusing on subtlety, context-awareness, and simplicity, this AI device reimagines how technology can exist in our lives without intruding on them. It’s not the louder, flashier gadget that commands attention, but the silent intelligence that enhances our everyday routine.

If the vision holds true through production and adoption, this device could begin a movement away from dependency on screens and toward more intentional, effortless interaction with technology. The impact could carry over to future innovations, pushing the industry to reconsider what it means for a device to truly serve the user. In doing so, OpenAI and Ive may not just reshape AI hardware—they may reshape our expectations of tech itself.